AI compliance: using artificial intelligence in a legally compliant & responsible manner

AI compliance ensures that your company uses AI systems lawfully and meets regulatory requirements ranging from the EU AI Act to the GDPR. This article shows you how to establish AI compliance systematically, minimize risks and use AI strategically to make business processes more efficient.

AI compliance: the most important points in brief

- AI compliance covers the legally compliant use of AI systems and the use of AI to optimize compliance processes.

- The EU AI Act classifies AI systems according to risk categories. Violations can result in fines of up to 35 million euros.

- AI tools improve compliance through automated monitoring, anomaly detection and intelligent document analysis.

- Systematic AI compliance management requires clear governance structures with interdisciplinary teams and an AI compliance officer.

- Implementation takes place in three phases: analysis and preparation, strategy development and step-by-step implementation.

- Regular training is essential to keep pace with the rapid development of AI technologies.

What does AI compliance mean?

AI compliance refers to adherence to all legal requirements and ethical standards when using artificial intelligence in a company. It comprises two central aspects: On the one hand, you must develop and use AI systems in a legally compliant manner. On the other hand, you can use AI in a targeted manner to optimize your compliance processes.

Special challenges in AI compliance:

- AI systems often make autonomous decisions that are difficult to understand.

- The so-called "black box problem" (opaque algorithms) makes it difficult to control decision-making processes.

- AI applications often process large amounts of personal data and are subject to additional data protection requirements.

Because AI compliance overlaps with data protection compliance, IT compliance and traditional compliance, it requires an interdisciplinary approach and must be integrated into existing governance structures.

Legal challenges posed by AI systems

The regulatory landscape for artificial intelligence is developing rapidly. New laws and guidelines are creating a complex framework that companies need to understand and implement.

EU AI Act: risk-based regulation

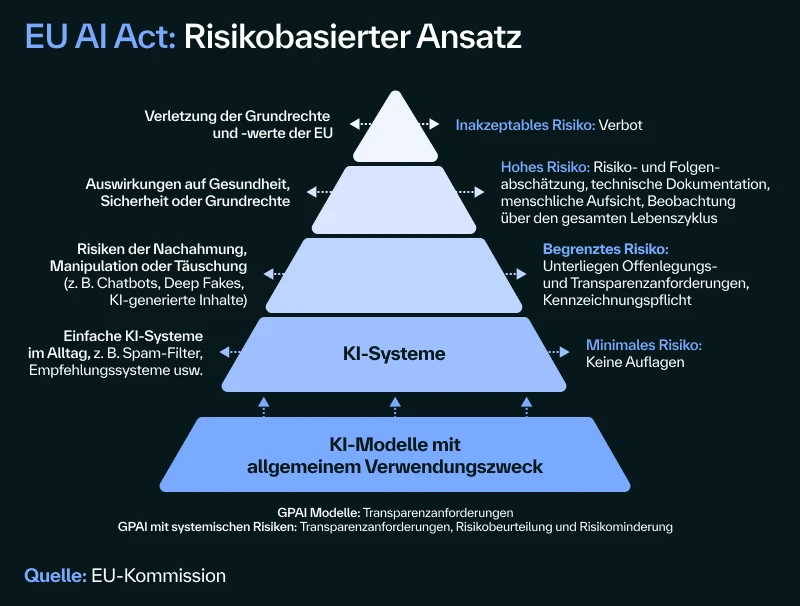

The EU AI Act is the world's first comprehensive law on the regulation of artificial intelligence. It follows a risk-based approach and classifies AI systems into four categories:

- Minimal risk: These AI applications are not subject to any specific obligations. Only general requirements apply, such as ensuring sufficient AI literacy among employees.

- Limited risk: AI systems such as chatbots must fulfill transparency obligations and inform users about the use of AI.

- High risk: AI systems in critical areas such as human resources, education or law enforcement are subject to extensive compliance obligations. These include risk management systems, data quality standards and human oversight.

- Unacceptable risk: AI practices such as social scoring or subliminal manipulation are generally prohibited. Real-time biometric identification in public spaces is also subject to strict restrictions.
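To illustrate how the risk-based approach works, the following Python sketch assigns a provisional risk tier to an AI use case. It is deliberately simplified: the sets `PROHIBITED_PRACTICES` and `HIGH_RISK_AREAS` and the function `triage` are hypothetical and only contain the examples named above, whereas an actual classification requires a legal review against the full text of the AI Act and its annexes. The checks run from the most to the least severe tier, so a use case always lands in the strictest category that applies.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """Risk tiers of the EU AI Act, ordered from least to most severe."""
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical, heavily simplified criteria: only the examples named in this article.
PROHIBITED_PRACTICES = {"social scoring", "subliminal manipulation"}
HIGH_RISK_AREAS = {"human resources", "education", "law enforcement"}


def triage(practice: str, area: str, interacts_with_people: bool) -> RiskTier:
    """Return a provisional risk tier, checking the most severe tier first."""
    if practice.lower() in PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if area.lower() in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if interacts_with_people:  # e.g. chatbots: transparency obligations
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


print(triage("applicant screening chatbot", "human resources", True).name)  # HIGH
print(triage("customer service chatbot", "customer service", True).name)    # LIMITED
```

A chatbot used for applicant screening in HR therefore ends up in the high-risk tier rather than the limited-risk tier. In practice, a system can be subject to the obligations of several tiers at once, for example high-risk requirements plus transparency obligations; the sketch only returns the strictest one.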

GPAI models (general-purpose AI models), such as large language models, play a special role. Their providers are subject to specific information and transparency obligations and can rely on codes of practice to demonstrate compliance.

Sanctions: For the most serious violations, such as the use of prohibited AI practices, fines can reach up to 35 million euros or 7% of annual global turnover, whichever is higher.
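The "whichever is higher" rule is simple arithmetic. Below is a minimal Python sketch, assuming the turnover is given in whole euros; the function name `max_fine_eur` is hypothetical. It also reflects the fact that for SMEs and start-ups the AI Act applies the lower of the two amounts. The result is only the upper limit: the actual fine is set case by case by the authorities.

```python
def max_fine_eur(annual_global_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper limit of an AI Act fine for the most serious violations.

    Two caps apply: a fixed amount of EUR 35 million and 7% of annual
    global turnover. Normally the higher cap is decisive; for SMEs and
    start-ups, the lower one.
    """
    fixed_cap = 35_000_000
    turnover_cap = annual_global_turnover_eur * 7 // 100  # integer math, no float rounding
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur(100_000_000))              # 35000000 (fixed cap is higher)
print(max_fine_eur(1_000_000_000))            # 70000000 (7% is higher)
print(max_fine_eur(20_000_000, is_sme=True))  # 1400000 (lower cap for SMEs)
```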

GDPR & data protection challenges

The GDPR places specific requirements on AI systems that process personal data:

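- Automated decisions: Decisions based solely on automated processing, including profiling, that have legal or similarly significant effects on data subjects are only permitted under certain conditions (Art. 22 GDPR).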

- Rights of data subjects: Data subjects have the right to be informed about the logic of automated decisions and the right to object.

- Transparency: Data processing must be transparent, so the way AI algorithms reach their results must be comprehensible and explainable.

- Data use: Principles such as data minimization and purpose limitation also apply to AI training.

- Data protection impact assessment: This is mandatory for AI projects that are likely to result in a high risk to the rights and freedoms of data subjects.

Include data protection impact assessments in your AI projects at an early stage and document all processing steps in a traceable manner.

Further compliance risks

In addition to the GDPR and the EU AI Act, AI systems pose further legal challenges:

- Liability issues: The general liability rules of the German Civil Code and the Product Liability Act currently apply. The new EU Product Liability Directive must be transposed into German law by December 2026 and also covers software and AI systems.

- Copyright: Training data must be legally acquired and must not infringe any copyrights.

- Business secrets: Confidential information must be protected during AI training and deployment.

- Industry-specific regulations: Additional requirements apply in certain sectors such as financial services, healthcare or automotive.

Define clear responsibilities today and review appropriate insurance solutions for AI-related risks.

AI as an opportunity for more efficient compliance processes

While AI creates new compliance challenges, it also offers numerous opportunities to improve compliance processes. Intelligent automation can significantly increase the efficiency, quality and speed of response of your compliance measures.

Automation & increased efficiency

AI tools revolutionize compliance processes through intelligent automation:

- Automated compliance monitoring: continuous, real-time monitoring of adherence to internal guidelines

- Anomaly detection: machine learning identifies unusual patterns and potential violations

- Intelligent document review: automatic analysis of contracts and compliance documents

- Compliance chatbots: answer employee questions around the clock and take the pressure off teams

- Automatic data redaction: anonymization of personal data in seconds

This automation significantly reduces the manual audit effort and enables more consistent compliance assessments.
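Two of these techniques can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production tool: instead of a trained machine learning model, the hypothetical `flag_anomalies` function uses a simple statistical method, the modified z-score based on the median absolute deviation, and the hypothetical `redact_emails` function only masks e-mail addresses with a simplified regular expression. The threshold of 3.5 is a common choice for the modified z-score, assumed here for illustration.

```python
import re
from statistics import median


def flag_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Unlike mean and standard deviation, median and MAD
    are not distorted by the very outliers they are meant to detect.
    """
    if not values:
        return []
    med = median(values)
    mad = median(abs(x - med) for x in values)
    if mad == 0:
        return []  # no spread to measure deviations against
    return [i for i, x in enumerate(values) if 0.6745 * abs(x - med) / mad > threshold]


# Simplified pattern that only covers e-mail addresses (an assumption for illustration).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_emails(text: str) -> str:
    """Replace every e-mail address in `text` with a placeholder."""
    return EMAIL_PATTERN.sub("[REDACTED]", text)


print(flag_anomalies([100, 102, 98, 101, 99, 5000]))  # [5]
print(redact_emails("Contact jane.doe@example.com or j.smith@corp.co.uk."))
# Contact [REDACTED] or [REDACTED].
```

Pattern-based masking of this kind does not by itself guarantee anonymization within the meaning of the GDPR: names, addresses or combinations of indirect identifiers require further techniques.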

Risk management & reporting

AI improves strategic compliance functions:

- Predictive analytics: analyzes historical data and identifies patterns for future compliance risks

- AI-supported due diligence: automatic verification of business partners by searching public databases and sanctions lists

- Early warning systems: continuous monitoring of regulatory changes with intelligent filters

- Automated reporting: real-time creation of compliance reports from different systems

- Intelligent prioritization: automatic assessment of risks and urgencies for optimal resource allocation

This allows you to take preventive measures before breaches occur, while your teams focus on the most important tasks.
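Automatic verification of business partners often starts with matching names against sanctions lists, where spelling variants are the main difficulty. The sketch below uses simple fuzzy string matching from Python's standard library (`difflib.SequenceMatcher`) as a stand-in for the more sophisticated entity-resolution models that AI-supported tools use. The list entries, the `normalize` and `screen` helpers and the threshold of 0.85 are hypothetical; real screening draws on official sources such as the EU's consolidated list of financial sanctions.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lower-case a name and drop punctuation and extra whitespace."""
    for char in ",.;":
        name = name.replace(char, " ")
    return " ".join(name.lower().split())


def screen(partner: str, sanctions_list: list[str], threshold: float = 0.85) -> list[tuple[str, float]]:
    """Return list entries similar to `partner`, best match first."""
    candidate = normalize(partner)
    hits = []
    for entry in sanctions_list:
        score = SequenceMatcher(None, candidate, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 2)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical list entries for illustration only.
entries = ["Acme Trading Ltd", "Globex Corp"]
print(screen("ACME Tradng Ltd.", entries))  # [('Acme Trading Ltd', 0.97)]
```

Hits are candidates for manual review, not automatic decisions: a low threshold produces more false positives, while a high one risks missing genuine matches.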

Systematically establishing AI compliance management

An effective AI compliance system is based on clear structures and proven building blocks. The complexity of AI systems and regulatory requirements makes a holistic approach indispensable.

Governance structures: The organizational foundations

AI compliance only works as a team:

- Interdisciplinary teams: cooperation between representatives from compliance, data protection, IT, legal and HR

- AI compliance representative: coordinates activities and acts as a central point of contact for AI compliance issues

- AI Ethics Committee: in complex organizations, prepares strategic decisions and defines ethical guidelines

- Integration: Integrate AI compliance into existing compliance management systems instead of setting up parallel structures

The role of the AI compliance officer requires both technical understanding and legal expertise. This person should have an overview of all relevant areas of law, from the GDPR to industry-specific regulations.

The most important areas of action

Successful AI compliance rests on four central pillars:

- Transparency & explainability: Explainable AI technologies make AI decisions comprehensible. Documented decision-making processes solve the black box problem and create trust among users and supervisory authorities.

- Non-discrimination & fairness: Procedures for avoiding bias in algorithms and training data are fundamental. Regular tests for discriminatory effects and appropriate corrective measures should be standard practice.

- Data security & quality: High quality standards for training data and its traceable origin form the foundation. Protective measures against unauthorized access and misuse of AI systems are essential.

- Monitoring & audits: Continuous monitoring enables the early detection of compliance violations. Regular audits and their documentation create legal certainty and support compliance with all relevant regulations.
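Regular tests for discriminatory effects can start with a simple metric such as the disparate impact ratio: the lowest selection rate of any group divided by the highest. The Python sketch below flags a result for review when the ratio falls below 0.8, the so-called four-fifths rule from US employment practice. This threshold is an assumption chosen for illustration, not a requirement of the EU AI Act, and the function names and group labels are hypothetical.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate; outcomes[group] = (selected, total)."""
    rates = {}
    for group, (selected, total) in outcomes.items():
        if total <= 0:
            raise ValueError(f"group {group!r} has no cases")
        rates[group] = selected / total
    return rates


def disparate_impact_ratio(outcomes: dict[str, tuple[int, int]]) -> float:
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group: no disparity measurable
    return min(rates.values()) / highest


def needs_review(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> bool:
    """Flag the result for review if the ratio falls below the threshold."""
    return disparate_impact_ratio(outcomes) < threshold


# Hypothetical results of an AI-supported applicant pre-selection.
outcomes = {"group_a": (40, 100), "group_b": (24, 100)}
print(round(disparate_impact_ratio(outcomes), 2))  # 0.6
print(needs_review(outcomes))                      # True
```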

Standards & guidance

ISO/IEC 42001 for AI management systems provides a proven framework for quality assurance and structured implementation of AI governance. This international standard helps companies to systematically manage AI risks and meet compliance requirements.

In addition, industry-specific guidelines and best practices support the design of a company-specific AI compliance system. Orientation towards established standards also facilitates communication with supervisory authorities and business partners.

Practical guide: Implementing AI compliance step by step

With this three-phase plan for implementing AI compliance, you can systematically achieve your goal and save resources in the process:

Phase 1: Analysis & preparation

Basic steps for starting a project:

- Create an AI inventory: complete coverage of all AI applications, including algorithms embedded in standard software

- Form a project team: interdisciplinary team of compliance, data protection, IT and legal experts with clear roles

- Carry out a gap analysis: Identify current compliance gaps and prioritize by risk and effort

- Identify quick wins: Measures with quick implementation and low effort for initial successes
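The gap analysis and the search for quick wins can be supported by a simple, structured list of findings. In the following Python sketch, each gap refers to a system from the AI inventory and is rated for risk and effort on a hypothetical scale of 1 (low) to 5 (high); the `Gap` class and both functions are illustrative assumptions, not part of a specific tool. Gaps are sorted by highest risk first, with lower effort breaking ties, and quick wins are the gaps whose effort stays at or below a chosen limit.

```python
from dataclasses import dataclass


@dataclass
class Gap:
    system: str       # AI system from the inventory
    description: str  # what is missing
    risk: int         # hypothetical scale: 1 (low) to 5 (high)
    effort: int       # hypothetical scale: 1 (low) to 5 (high)


def prioritize(gaps: list[Gap]) -> list[Gap]:
    """Highest risk first; for equal risk, lower effort first."""
    return sorted(gaps, key=lambda gap: (-gap.risk, gap.effort))


def quick_wins(gaps: list[Gap], max_effort: int = 2) -> list[Gap]:
    """Low-effort gaps, in priority order."""
    return [gap for gap in prioritize(gaps) if gap.effort <= max_effort]


gaps = [
    Gap("Applicant screening tool", "no documented human oversight", risk=5, effort=4),
    Gap("Spam filter", "missing from AI inventory", risk=1, effort=1),
    Gap("Support chatbot", "users not informed about AI use", risk=3, effort=1),
]
print([gap.system for gap in prioritize(gaps)])
# ['Applicant screening tool', 'Support chatbot', 'Spam filter']
print([gap.system for gap in quick_wins(gaps)])
# ['Support chatbot', 'Spam filter']
```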

Phase 2: Strategy & guidelines

Development of the strategic foundations:

- AI compliance strategy: comprehensive strategy based on business objectives, covering both defensive aspects (legally compliant use of AI) and offensive aspects (using AI to improve compliance)

- Company-specific guidelines: specific instructions for various application scenarios, taking into account all relevant areas of law

- Tool evaluation: Assessment of specialized AI compliance solutions in a rapidly evolving market

- Change management: a concept to increase acceptance of new processes and reduce resistance to them

Phase 3: Implementation & rollout

Practical implementation and introduction:

- Start pilot project: test with selected AI systems to gather experience and refine processes

- Step-by-step implementation: gradual rollout of the developed processes and workflows, with sufficient training and support for everyone involved

- Establish monitoring: control mechanisms that track effectiveness using clear key figures and reporting channels

- Continuous improvement: system for measuring success and regular adjustments in the dynamic AI compliance field
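Clear key figures make monitoring measurable. As a minimal sketch, assuming each inventory entry records whether a risk assessment exists and when the system was last reviewed, the following Python code computes the share of assessed systems and lists overdue reviews. The `InventoryEntry` fields and the annual review interval are hypothetical choices.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class InventoryEntry:
    name: str
    risk_assessed: bool  # is a documented risk assessment available?
    last_review: date    # date of the last compliance review


def assessment_coverage(entries: list[InventoryEntry]) -> float:
    """Share of inventoried AI systems with a risk assessment (0.0 to 1.0)."""
    if not entries:
        return 0.0
    return sum(entry.risk_assessed for entry in entries) / len(entries)


def overdue_reviews(entries: list[InventoryEntry], today: date, interval_days: int = 365) -> list[str]:
    """Names of systems whose last review is older than the review interval."""
    limit = timedelta(days=interval_days)
    return [entry.name for entry in entries if today - entry.last_review > limit]


inventory = [
    InventoryEntry("Support chatbot", True, date(2024, 1, 10)),
    InventoryEntry("Applicant screening tool", False, date(2025, 3, 1)),
]
today = date(2025, 6, 1)
print(assessment_coverage(inventory))     # 0.5
print(overdue_reviews(inventory, today))  # ['Support chatbot']
```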

AI compliance training: Build up targeted knowledge

The complexity and rapid development of AI technologies and regulations make continuous training indispensable. Targeted compliance training courses create the necessary awareness and expertise for the legally compliant use of AI:

- Executives need a strategic understanding of AI risks and opportunities to make informed investment decisions.

- Compliance teams need detailed knowledge of the legal requirements and their practical implementation.

- Developers need to understand the technical measures that ensure compliance.

- Users should be informed about the responsible use of AI.

Training content includes legal basics (EU AI Act, GDPR), practical implementation steps, available tools and best practices from other companies. Combine theoretical knowledge with practical exercises and case studies.

Haufe Akademie: Holistic compliance solutions

As an experienced partner for compliance training, we understand the challenges of digital transformation. Haufe Akademie's Compliance College offers an integrated platform that combines AI compliance with the areas of data protection, IT security and occupational health and safety.


FAQ

How can artificial intelligence be used in compliance?

AI is revolutionizing compliance processes through automated monitoring, anomaly detection, intelligent document analysis and risk assessment. Chatbots answer employee questions, while predictive analytics identifies potential compliance risks at an early stage. This makes compliance measures more efficient, more consistent and of higher quality.

Which laws are relevant for AI compliance?

The most important regulations are the EU AI Act, the GDPR, national data protection laws and industry-specific regulations. Depending on the area of application, other laws such as employment law, product liability law or financial market regulation may also be relevant. The legal situation is developing rapidly.

Who is responsible for AI compliance in the company?

An AI compliance officer often coordinates the activities between the compliance, data protection, IT and legal departments. The management bears overall responsibility and must provide the necessary resources. AI compliance is an interdisciplinary task: successful implementation requires cooperation between all departments.

What are the main AI compliance risks?

Key risks include discrimination through biased algorithms, data protection violations, a lack of transparency in AI decisions, liability issues in the event of errors and breaches of industry-specific regulations. Without appropriate AI compliance, there is a risk of fines of up to 35 million euros or 7% of annual global turnover as well as significant reputational damage.
